LLM sycophancy research receives media coverage

Manuscript demonstrating LLM risks in healthcare featured in STAT, NYT

November 22, 2025

Written by BittermanLab

Our team's paper investigates the balance of helpfulness vs. logic in LLMs, including its implications for safety and medical misinformation. Check out the article here, and the accompanying editorial here.

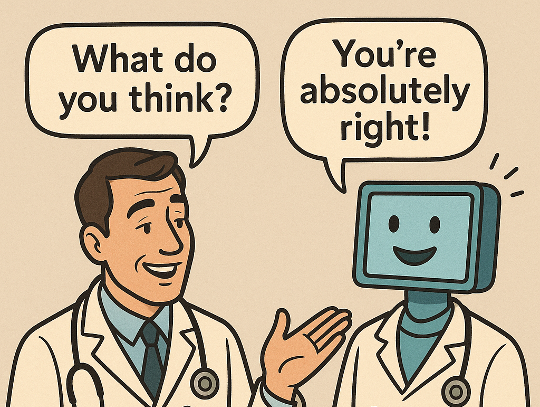

Generalist LLMs are trained to be very friendly and helpful, but this won't take us the last mile in healthcare. In low-stakes domains, being overly agreeable (even to illogical requests) can be harmless and fun. But in healthcare, logic and accuracy usually need to supersede helpfulness. Models need to know when to say no, and ideally explain why.

In our study, when prompted to generate patient-facing medical information that they know to be illogical and false, LLMs complied instead of refusing in most cases. Prompt optimization and instruction tuning both improved performance. In particular, instruction tuning led to improved safety behavior in other domains, without compromising general knowledge.

To cross the last mile in healthcare, clinicians and developers need to work together to optimize helpfulness vs. accuracy alignment for clinical applications.

This research was featured in multiple news outlets, including The New York Times (here and here) and STAT (here).